We can give instructions to a fellow human by:

- Talking to them

- Handwriting a note

- Typing a text

- Waving a flag

- Triggering a traffic device

- Sounding a siren

- Sending a memo

- Choosing from the options on a menu

- Making a facial expression

- and perhaps a dozen or more other methods…

Most people develop the voiceboxes, limbs and facial expressions that make any of these usable. Computers, over the decades, have had each of these engineered for them.

In 1983, Dan Lovy built a parser for the adventure games I was marketing at Spinnaker. Suddenly, you could type instructions into the game instead of relying on the more emotional but crude joystick for input. So, “pick up the dragon’s pearl” was something the game could understand.

There’s a restaurant in the Bronx where the waiter asks, “What do you want?” There’s no menu. If you imagine something within a certain range, they’ll make it. This is stressful, because we’re used to the paradigm of multiple choice in this setting.

A smart doctor doesn’t ask, “What’s wrong?” Instead, she takes a few minutes to notice, converse and connect, because our fear of mortality gets in the way of a truthful analysis.

As the worlds of tech and humanity merge, it’s worth thinking hard about the right way to engage with a device. When a car invites you to talk with it, the car designer is betting our lives that the car will actually respond to the vagaries of speech in a specific way. Perhaps a steering wheel is a better user interface.

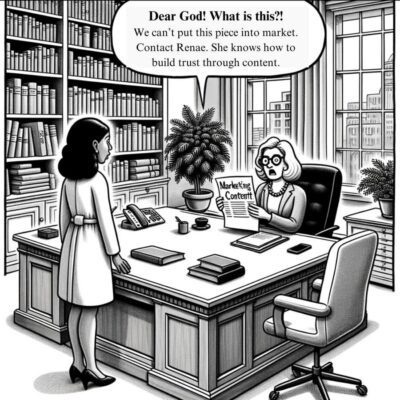

(And it’s not just cars. Sometimes we fail to communicate with each other in a useful and specific way that matches the work to be done…)